By Geek Park

I suspect most of us have, at some point, used the idea that laziness drives progress as an excuse while “slacking off” or “lying flat”.

From the steam-engine industrial revolution to the computer-driven digital revolution, technological progress has indeed given humans more and more room to be lazy in some respects.

As the most promising next-generation platform, will AI make humans even “lazier”?

It seems so, and that is not good news.

According to a new study published in the journal “Frontiers in Robotics and AI”, when humans work alongside machines, they really do become “lazier”.

“Teamwork can be both a blessing and a curse,” said Cymek, the study’s lead author.

So, in the AI era, could the biggest crisis for humanity be not replacement by machines, but becoming so “lazy” that our own abilities degenerate?

01. Machine assistants allow humans to “relax their vigilance”

With a helper as capable as a machine at their side, people tend to become more “careless”.

Researchers at the Technical University of Berlin in Germany gave 42 participants blurry images of circuit boards and asked them to inspect the boards for defects. Half of the participants were told that the boards had already been inspected by a robot called Panda, which had flagged defects.

In the experiment, Panda detected 94.8% of the defects. All participants saw the same 320 scanned circuit board images, and when the researchers looked more closely at the participants’ error rates, they found that those who worked with Panda caught fewer defects later in the task, after they had already seen Panda successfully flag many defects.

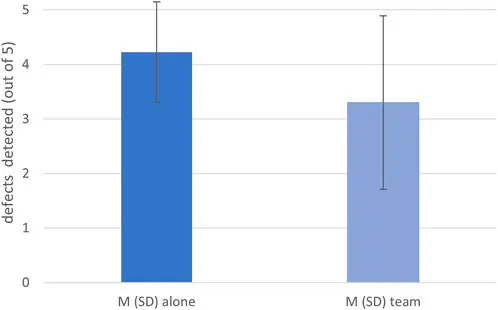

Participants in both groups examined nearly the entire surface of each board, spent time searching, and rated their own effort as high. Yet participants who worked with the robot found an average of 3.3 defects, while those who completed the task alone found an average of 4.23.

“This suggests that participants may have inspected the circuit boards less attentively when working with a robotic partner,” the study said. “Participants in our study seemed to maintain the effort of inspecting the circuit boards, but it appears that the inspection was carried out with less mental effort and less attention to the sampled information.”

In other words, people who are told that a robot has already checked a part, and who have seen how reliable that robot is, go on to find fewer defects. Subconsciously, they assume that Panda is unlikely to miss anything, producing a “social loafing” effect: the tendency to put in less effort when working as part of a team than when working alone.

The implications of this research are particularly important for industries that rely on rigorous quality control. The authors warn that even a brief lapse in human attention, perhaps caused by overreliance on the robot’s accuracy, could compromise safety.

“In longer shifts, when tasks become routine and the work environment offers little performance monitoring and feedback, the loss of motivation tends to be greater,” said Onnasch, another of the study’s authors. “In manufacturing in general, and especially in safety-related areas where double-checking is common, this can have a negative impact on work results.”

Of course, the study also has limitations. The sample size is small, and “social loafing” is difficult to reproduce in the laboratory because participants know they are being watched. “The main limitation is the laboratory environment,” Cymek explains. “To understand the magnitude of the problem of loss of motivation in human-machine interaction, we need to get out of the laboratory and test our hypotheses in real work settings with experienced workers who routinely work with robots.”

02. The “crisis of human-machine cooperation” has already occurred

Outside the laboratory, this kind of “degeneration” in human-machine cooperation is already happening in the real world.

In autonomous driving, there is a phenomenon similar to “social loafing” called “automation complacency”: people who trust an automated system stop monitoring it closely and let their attention drift.

In March 2018, an Uber self-driving test car with a safety driver on board struck and killed a pedestrian pushing a bicycle across the road in Arizona, USA. Police analysis found that if the safety driver had been watching the road, the car could have stopped 12.8 meters short of the victim and the tragedy could have been avoided.

Tesla has frequently drawn scrutiny from U.S. media and regulators, often over crashes involving its Autopilot system. A typical scenario is a driver who sleeps or plays a game while Autopilot is engaged and is then involved in a fatal crash.

Amid the current AI boom, the prediction that machines will replace humans is moving closer to reality. One camp believes machines will serve humanity; the other fears that humans will inadvertently create something harmful.

In the medical field, IBM’s AI system Watson for Oncology was reported to have given unsafe treatment recommendations for cancer patients. This year, a paper reported that generative AI already performs at or near the passing threshold on all three steps of the U.S. Medical Licensing Examination. Extending the same line of thinking: if AI one day diagnoses and treats patients while human doctors oversee it, will those doctors fall prey to “social loafing” and “automation complacency”?


The authors of the study point out: “Combining human and robot capabilities clearly offers many opportunities, but we should also consider the unintended group effects that can occur in human-robot teams. When humans and robots work on a task together, this can lead to a loss of motivation among human teammates and make effects such as social loafing more likely to occur.”

There are also concerns that AI could affect human thinking and creativity, weaken human relationships, and pull people away from reality as a whole. Pi, the chatbot launched by Inflection, a high-profile Silicon Valley generative AI startup, is designed to be a friendly and supportive companion. Its founder has described Pi as a tool to help people cope with loneliness, someone they can talk to. Critics say it offers an escape from reality rather than interaction with real people.

Today, the relationship between people and their tools has reached a new level. In a sense, every new tool has made humans lazier: robot vacuums spare people from sweeping their homes, and mobile phones mean we no longer need to memorize phone numbers.

What sets AI apart from earlier technologies is that more of our thinking and decision-making is handed over to it, and AI itself is largely a black box, so the shift is closer to a transfer of our autonomy of thought. When people hand driving decisions entirely to autonomous driving systems and medical diagnoses to AI, the potential costs may be of a completely different order from the cost of not remembering phone numbers.

Computer scientist Joseph Weizenbaum, who created ELIZA, widely regarded as the first chatbot, once compared science to “an addictive drug” that, taken in ever-increasing doses, turns into “a chronic poison”. He also warned that introducing computers into some complex human activities may lead to a point of no return.

When people hand their thinking and judgment over to machines, even if only as a “reference”, the demons of “social loafing” and “automation complacency” may be lurking within, and with every repetition of the task they may turn into a chronic poison.